Introduction (What we’ll learn)

The execution cost of AWS Lambda depends on two things: the memory allocated and the execution time. You may have noticed that the cost is calculated in GB-seconds (GB-s). If your lambda is dominated by I/O-heavy (network-bound) tasks, then threading can reduce the execution time, and thus the cost.

In this tutorial, we will see how threads can be used within AWS Lambda. Please note that this tutorial will only be useful for network-bound tasks. Multi-threading in AWS Lambda will not help with computationally heavy tasks, because Python’s Global Interpreter Lock (GIL) allows only one thread to execute Python bytecode at a time and thwarts any efforts at parallelism using threads. A quick Google search will turn up plenty of material on Python’s GIL.
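To get a feel for the GIL point, here is a minimal sketch that runs the same CPU-bound loop sequentially and in three threads. The function names (cpu_task, run_sequential, run_threaded) and the loop size are made up for illustration. On a standard CPython build, the threaded run typically takes about as long as the sequential one, although exact timings will vary from machine to machine.

```python
import time
from threading import Thread


def cpu_task(n, out, slot):
    # Pure-Python arithmetic: the running thread holds the GIL while it computes.
    total = 0
    for i in range(n):
        total += i * i
    out[slot] = total


def run_sequential(n, count):
    out = [None] * count
    for slot in range(count):
        cpu_task(n, out, slot)
    return out


def run_threaded(n, count):
    out = [None] * count
    threads = [Thread(target=cpu_task, args=(n, out, slot)) for slot in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return out


def timed(fn, *args):
    # Return the function's result together with its wall-clock duration.
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    seq, seq_s = timed(run_sequential, 1_000_000, 3)
    thr, thr_s = timed(run_threaded, 1_000_000, 3)
    print(f"sequential: {seq_s:.2f}s, threaded: {thr_s:.2f}s, same results: {seq == thr}")
```

Both versions compute identical results. Only the wall-clock time is of interest here.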

If you have a computationally heavy task, and have enough memory to get more than one vCPU, you can consider multiprocessing. A nice tutorial on multiprocessing in AWS Lambda can be found here. Note that AWS Lambda provides you with a single vCPU up to approx. 1.8 GB of memory.

The structure of this tutorial will be as follows:

- Prerequisites

- Threading Example – Code Walkthrough

- Execution time comparison between threaded and non-threaded operation

- Execution time comparison between threading and multiprocessing

- Closing Thoughts

Prerequisites

It is assumed that you are familiar with AWS Lambda and know how to create and deploy lambda functions. It is also assumed that you are familiar with Python, and plan to use the Python runtime for your AWS Lambda functions.

Threading Example – Code Walkthrough

Alright, let’s get our hands dirty. We will keep our code very simple. Within each thread, we will just fetch a URL (https://iotespresso.com) to simulate a network operation. The sample lambda code is given below:

```python
from threading import Thread
import time
import urllib.request  # "import urllib" alone does not guarantee urllib.request is loaded


def thread_function(index):
    # Fetch the page to simulate a network-bound operation.
    f = urllib.request.urlopen("https://iotespresso.com")
    print(index)


def lambda_handler(event, context):
    threads = []
    # Create and start one thread per index.
    for index in range(3):
        x = Thread(target=thread_function, args=(index,))
        threads.append(x)
        x.start()
    # Wait for every thread to finish before the handler returns.
    for thread in threads:
        thread.join()
```

Each thread takes an index as an argument, which we print within the thread to see which thread finishes its request first. We initialize each thread using the Thread() constructor, start it using the .start() method, and then wait for it to complete using the .join() method.
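One limitation of the code above is that a plain Thread has no direct way to hand a return value back to the handler. As an alternative (not what this tutorial's measurements use), the standard library's concurrent.futures.ThreadPoolExecutor follows the same start-then-wait pattern and also collects results. The sketch below makes a few assumptions: fake_fetch is a hypothetical stand-in for the urlopen() call, and it sleeps instead of touching the network so that it runs anywhere.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(index, delay=0.1):
    # Hypothetical stand-in for urlopen(): sleeps to mimic network latency.
    time.sleep(delay)
    return index


def lambda_handler(event, context):
    # Leaving the "with" block waits for all workers, much like join().
    with ThreadPoolExecutor(max_workers=3) as pool:
        # map() returns results in input order, whichever thread finishes first.
        results = list(pool.map(fake_fetch, range(3)))
    return {"results": results}
```

Whether the pool or explicit Thread objects read better is mostly a matter of taste. The pool becomes convenient once you need the results or want to cap the number of concurrent workers.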

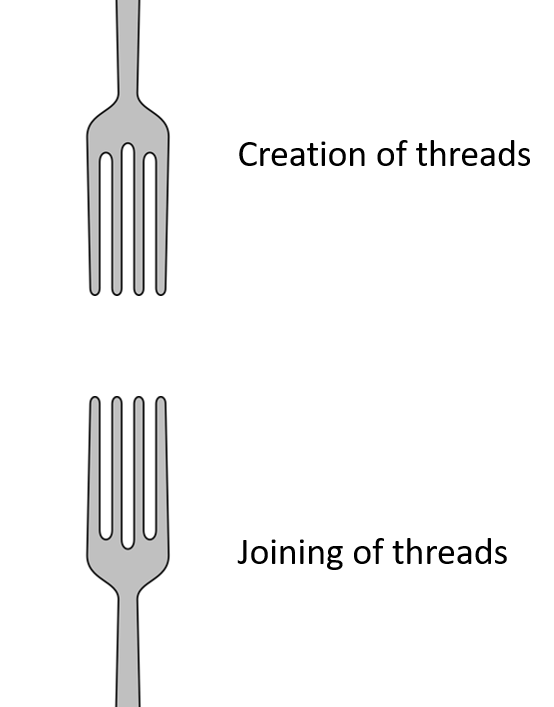

On a side note, you may be wondering why the function that waits for the threads to finish operations is called join(). I had this question when I first learnt about this function. Hopefully the fork analogy in the image below will answer that.

Now that the threading code is clear, let’s look at what the non-threaded or sequential equivalent of the above code would look like. It is quite simple, as you can see below:

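```python
import time
import urllib.request  # "import urllib" alone does not guarantee urllib.request is loaded


def thread_function(index):
    # Same simulated network operation as in the threaded version.
    f = urllib.request.urlopen("https://iotespresso.com")
    print(index)


def lambda_handler(event, context):
    # Run the three requests one after another.
    for index in range(3):
        thread_function(index)
```

Execution time comparison – Threaded and non-threaded operation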

The above two lambda functions (threaded and sequential) were invoked with different memory settings. Here are the results related to the execution times (all values in milliseconds):

| Memory | Threaded Time (ms) | Sequential Time (ms) |
| --- | --- | --- |
| 128 MB | 1350 | 3089 |
| 512 MB | 1148 | 2745 |
| 1024 MB | 914 | 2686 |
| 2048 MB | 977 | 2647 |

As you can see, the execution times for the sequential lambda are roughly 2.3 to 2.9 times higher than those of the threaded lambda. In an ideal world, they would be exactly 3 times higher, because we have 3 threads. However, there are some overheads involved in the creation of the threads, and there may also be network fluctuations between different invocations.

With the above experiment, one thing is clear: for a use case like the one above, the user is definitely better off using threads, from the cost perspective.
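To make the cost argument concrete, the sketch below recomputes the speedup and the billed GB-s for each row of the table above. The helper names (gb_seconds, summarize) are my own, and the calculation assumes billing is simply memory in GB multiplied by duration in seconds. It ignores per-request charges and any rounding of the billed duration.

```python
# (memory in MB, threaded ms, sequential ms), copied from the table above
RESULTS = [
    (128, 1350, 3089),
    (512, 1148, 2745),
    (1024, 914, 2686),
    (2048, 977, 2647),
]


def gb_seconds(memory_mb, duration_ms):
    # Billed compute = memory in GB multiplied by duration in seconds.
    return (memory_mb / 1024) * (duration_ms / 1000)


def summarize(results):
    rows = []
    for memory_mb, threaded_ms, sequential_ms in results:
        rows.append({
            "memory_mb": memory_mb,
            "speedup": round(sequential_ms / threaded_ms, 2),
            "threaded_gb_s": round(gb_seconds(memory_mb, threaded_ms), 3),
            "sequential_gb_s": round(gb_seconds(memory_mb, sequential_ms), 3),
        })
    return rows


for row in summarize(RESULTS):
    print(row)
```

At every memory setting, the threaded version consumes fewer GB-s than the sequential one. Note also that for this workload, raising the memory increases the GB-s much faster than it reduces the duration, so the smallest setting is the cheapest here.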

Execution time comparison between threading and multiprocessing

Curious readers may be wondering how threading compares to multiprocessing. We would expect multiprocessing to take slightly longer, because creating a separate process costs more time and resources than creating a thread. Let’s verify this hypothesis.

The code for multiprocessing will be very similar to threading, as you can see from the code snippet given below:

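```python
from multiprocessing import Process
import time
import urllib.request  # "import urllib" alone does not guarantee urllib.request is loaded


def process_function(index):
    # Same simulated network operation, now run in a separate process.
    f = urllib.request.urlopen("https://iotespresso.com")
    print(index)


def lambda_handler(event, context):
    processes = []
    # Create and start one process per index.
    for index in range(3):
        x = Process(target=process_function, args=(index,))
        processes.append(x)
        x.start()
    # Wait for every process to finish before the handler returns.
    for process in processes:
        process.join()
```

Let’s compare the execution time for the threading and multiprocessing lambdas for different memory settings: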

| Memory | Threading Time (ms) | Multiprocessing Time (ms) |
| --- | --- | --- |
| 128 MB | 1384 | 1970 |
| 512 MB | 972 | 1158 |
| 1024 MB | 996 | 1082 |
| 2048 MB | 893 | 902 |

As expected, the threading time is always lower than the multiprocessing time. The difference, though, keeps shrinking as more memory is provided to the lambda. One explanation is that at higher memory settings, more compute is available to the lambda, which results in faster process creation.
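The shrinking gap is easy to quantify from the table above. The following sketch (the overhead helper is my own naming) computes how much longer multiprocessing took than threading at each memory setting, both in milliseconds and as a percentage of the threading time.

```python
# (memory in MB, threading ms, multiprocessing ms), copied from the table above
RESULTS = [
    (128, 1384, 1970),
    (512, 972, 1158),
    (1024, 996, 1082),
    (2048, 893, 902),
]


def overhead(results):
    # Extra time multiprocessing took over threading, absolute and relative.
    rows = []
    for memory_mb, thread_ms, process_ms in results:
        gap_ms = process_ms - thread_ms
        rows.append((memory_mb, gap_ms, round(100 * gap_ms / thread_ms, 1)))
    return rows


for memory_mb, gap_ms, gap_pct in overhead(RESULTS):
    print(f"{memory_mb} MB: +{gap_ms} ms ({gap_pct}% slower than threading)")
```

The gap drops from 586 ms at 128 MB to 9 ms at 2048 MB. Keep in mind that these are single measurements, so part of the difference may be network noise.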

Closing Thoughts

This post explains the benefits of using threading in AWS Lambda to save on execution time, and thus cost. However, I’d reiterate that you shouldn’t use threads for computationally intensive tasks (because of Python’s GIL). Use multiprocessing if you have to introduce parallelism in the system and have allocated enough memory to get more than 1 vCPU (only 1 vCPU up to approx. 1.8 GB of memory).

Another thing to consider is memory usage. For this example, there was hardly any difference in the memory used by the sequential and threaded versions. However, if each of your threads uses a lot of memory, you may exceed the memory allocated to your lambda function. You may have to increase the memory allocation in such cases.
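Lambda already prints the "Max Memory Used" value in the REPORT line of each invocation's logs. If you want the number inside the handler as well, one possible approach is the standard resource module, as in the sketch below. The peak_memory_mb helper is hypothetical, and the unit handling assumes Linux (which Lambda runs on) reports ru_maxrss in kilobytes while macOS reports bytes.

```python
import resource
import sys


def peak_memory_mb():
    # ru_maxrss is the peak resident set size of this process so far.
    # Assumption: kilobytes on Linux, bytes on macOS.
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


def lambda_handler(event, context):
    # ... start and join the worker threads here ...
    used_mb = peak_memory_mb()
    # memory_limit_in_mb is provided by the Lambda context object; it is
    # read defensively so the sketch also runs outside Lambda.
    limit_mb = getattr(context, "memory_limit_in_mb", "unknown")
    print(f"peak memory: {used_mb:.1f} MB (limit: {limit_mb} MB)")
    return {"peak_memory_mb": round(used_mb, 1)}
```

Comparing this figure between the sequential and threaded versions gives a quick indication of how much headroom is left before the allocation has to be raised.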

Also note that there is always an element of network fluctuation in the reported times. If you want repeatable timings, you can replace

```python
f = urllib.request.urlopen("https://iotespresso.com")
```

with

```python
time.sleep(1)
```

This will introduce a fixed 1-second delay in thread_function or process_function. I did not use the time delay in the examples because I wanted to demonstrate a more real-world example.
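Putting that substitution together, a self-contained version might look like the sketch below. It measures the sequential and threaded variants inside a single handler. The names (task, run_sequential, run_threaded, DELAY_S) are illustrative, and the delay is passed as a parameter so that you can shorten it while experimenting.

```python
import time
from threading import Thread

DELAY_S = 1  # fixed stand-in for the network call


def task(index, delay):
    # Sleeping releases the GIL, just like waiting on a network response.
    time.sleep(delay)


def run_sequential(count, delay):
    start = time.perf_counter()
    for index in range(count):
        task(index, delay)
    return time.perf_counter() - start


def run_threaded(count, delay):
    start = time.perf_counter()
    threads = [Thread(target=task, args=(index, delay)) for index in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


def lambda_handler(event, context):
    # Returns the elapsed wall-clock time of each variant in seconds.
    return {
        "sequential_s": round(run_sequential(3, DELAY_S), 2),
        "threaded_s": round(run_threaded(3, DELAY_S), 2),
    }
```

With three tasks, the sequential run should take a little over three times the delay, while the threaded run should take a little over one delay, plus the thread start-up overhead.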

In another post, we will discuss the sharing of data across threads within a lambda.
