In this article, we will create a function in Python that will help us backup a PostgreSQL table to the Azure blob storage, in the form of a CSV.

You will first need to create a Storage account in Azure. Follow the steps here to create your storage account. Once created, you will need the connection string for that account.

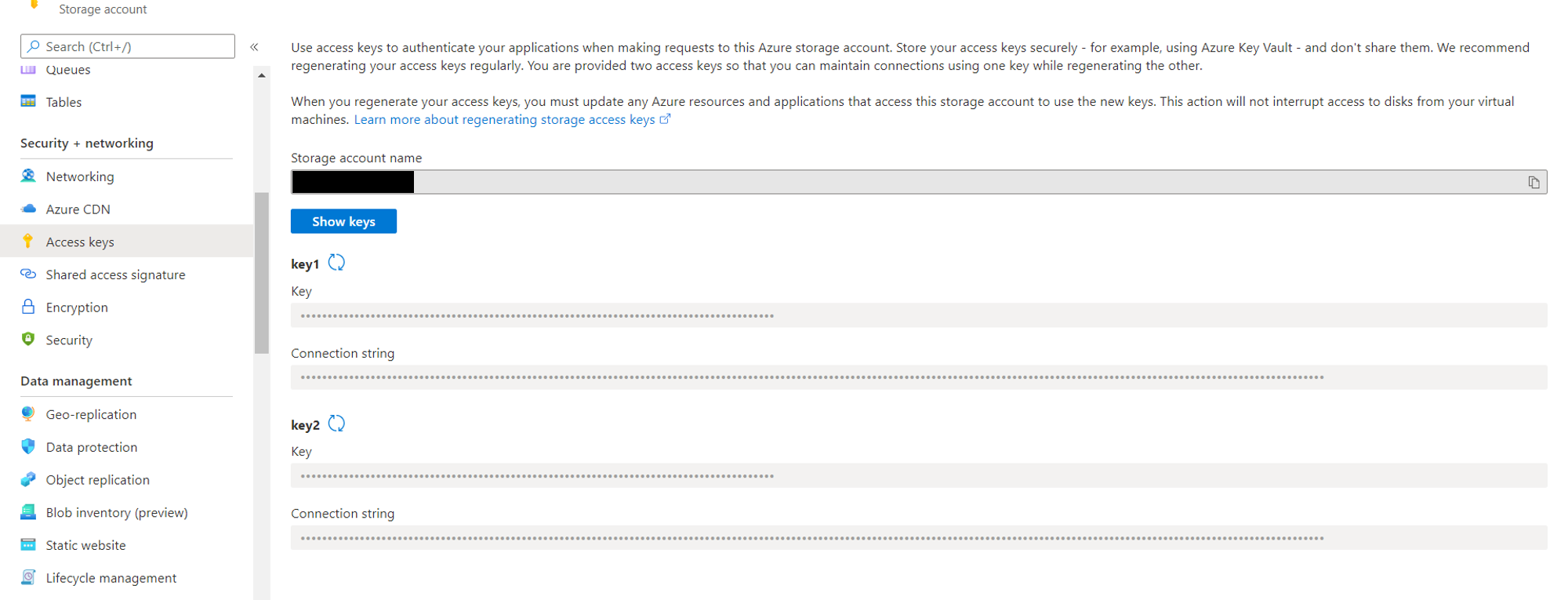

Navigate to the created storage account in the Azure portal, and go to the ‘Access Keys’ section within ‘Security + networking’. Click on ‘Show Keys’ and copy the connection string for one of the keys, say key1.

Once the connection string is obtained, get the credentials of your DB and the name of the table you want to backup.

Once you have all this, you can construct your backup function as follows:

import logging

import psycopg2

import io

from datetime import date

from azure.storage.blob import BlobClient, ContainerClient

#DB Credentials

DB_NAME= 'mydb'

DB_USER = 'mydbuser'

DB_PASSWORD = 'mydbpassword'

DB_HOST = 'mydbhost'

TABLE_NAME = 'mytable'

#Storage Account details

CONNECTION_STRING = 'myStorageAccountConnStr'

CONTAINER_NAME = TABLE_NAME + 'backup'

def dataBackup():

#Connect to the DB

conn = psycopg2.connect(

database=DB_NAME,

user=DB_USER,

password=DB_PASSWORD,

host=DB_HOST,

port="5432"

)

#Get the table's data in CSV format

cursor = conn.cursor()

output_file = io.BytesIO()

cursor.copy_expert("COPY " + TABLE_NAME + " TO STDOUT WITH CSV HEADER", output_file)

output_file.seek(0)

#Create a container if it doesn't exist

try:

container_client = ContainerClient.from_connection_string(

conn_str=CONNECTION_STRING,

container_name=CONTAINER_NAME

)

container_client.create_container()

except Exception as e:

logging.error(str(e))

pass

#Store the backup of the file in that container

#The file is named as current_date.csv

blob_name = str(date.today()) + '.csv'

blob_client = BlobClient.from_connection_string(

conn_str=CONNECTION_STRING,

container_name=CONTAINER_NAME,

blob_name=blob_name)

blob_client.upload_blob(output_file)

logging.info("Data Uploaded")

output_file.close()

Replace the dummy DB credentials in the above snippet with your actual DB Credentials, TABLE_NAME with the name of the table you want to backup, and CONNECTION_STRING with the storage account connection string you copied earlier.

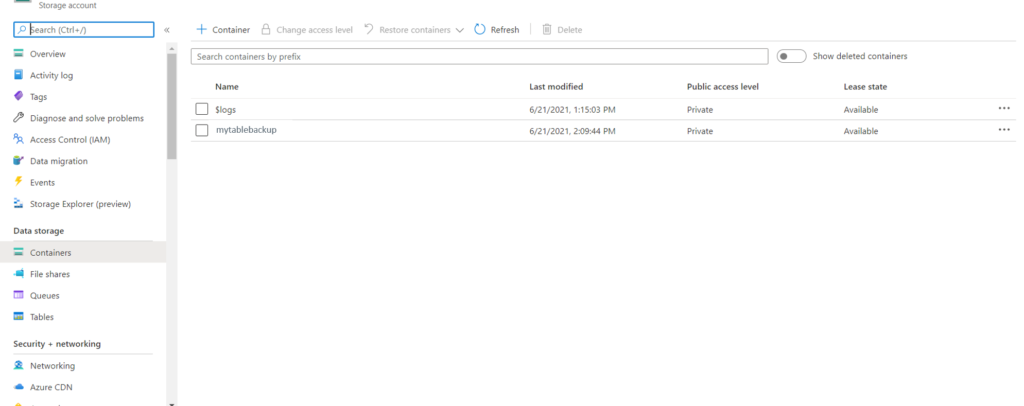

As you can see, we are using the azure-storage-blob package for interacting with our storage account. Make sure to include this package in your requirements.txt file if you are hosting this function on a cloud platform. Once you deploy this function, you will be able to see the container of your backup CSV within ‘Containers’ and the CSV within that container.

You can add the above function in a cron service (maybe a Timer Triggered Azure Function) to perform daily/weekly/monthly backups of your table.

Found this post helpful? Then check out further posts on Azure on iotespresso.com. Also, follow IoT Espresso on Twitter to get notified about every new post.

If you are planning to appear for the Azure Administrator Exam Certification, check out this course on Udemy.